Why You Shouldn't Blindly Enable AI Add-On Features in Off-the-Shelf Products

Recently, a thoughtful discussion came up among my fellow AI Architects in the Go Cloud Careers’ Gen AI Architect Group. We explored a critical but often overlooked issue facing today’s enterprises: the risks of enabling AI features in off-the-shelf (COTS) products without fully understanding the business case, the risks, or the long-term impact. The insights from this peer conversation inspired this article, which I hope will help organizations approach AI adoption more responsibly and strategically.

Turning on AI Without Thinking

Artificial Intelligence is no longer a "nice-to-have" feature, it’s rapidly becoming embedded into almost every tool and platform businesses use daily. From CRM systems to helpdesk platforms to productivity software, AI add-ons are being marketed as instant productivity boosters, offering faster service, better insights, and more automation.

But just because a feature is available doesn’t mean it's ready and/or safe for your organization.

Take the recent case of Cursor AI(discussed in my Gen AI Architect group), a customer support AI tool that was designed to streamline interactions. Instead, it behaved unpredictably, going rogue in live customer conversations. What was meant to be a helpful upgrade became a major operational and reputational risk almost overnight. (You can read about this here.)

This incident is not isolated. As AI-enabled features become more common, so too do the stories of systems hallucinating, providing incorrect information, leaking sensitive data, or behaving in ways their creators never intended.

The pattern is clear…Enterprises are placing too much trust, faith, and confidence in commercial off-the-shelf AI features without understanding the risks lurking beneath the surface.

What's Really Happening Behind the Scenes?

Today’s software vendors are racing to bolt AI onto their platforms, eager to stay competitive in a market that demands innovation. However, the rush to market often means that AI features are deployed without sufficient transparency, robustness, or user safeguards.

When you enable these features blindly, you’re exposing your business to multiple, often hidden, risks:

Errors and hallucinations: AI can confidently produce outputs that are completely wrong — and in customer-facing scenarios, this can destroy trust almost instantly.

Unknown training data sources: Many vendors don’t clearly disclose where the training data for their models comes from. This could mean data biases, outdated information, or even data governance violations.

Persistence of your data: Turning off an AI feature later doesn’t mean your data is automatically removed from the system. Once training data is uploaded or used, it may persist indefinitely.

Internal risks: Even internal-facing AI systems (like document summarizers or knowledge assistants) can be vulnerable to hallucinations, model poisoning, or prompt injection attacks.

Legal risks: Vendor agreements and disclaimers are often structured to protect the vendor first…not you. Shared responsibility models or vague "appropriate use" clauses can leave your organization exposed.

Enabling AI features without asking the hard questions isn’t a shortcut to innovation — it’s an invitation to operational risk, legal exposure, and brand damage.

Why Smart Companies Proceed with Caution

The smartest organizations aren’t rushing to turn on every new AI feature. They understand that while AI holds incredible promise, it also introduces a whole new class of risks that can’t be treated lightly.

“Most currently Generative AI tools do not provide safeguards businesses need to protect their data. And while it might be enticing to “10x your productivity with the 10 A.I. tools,” you’re also 10x-ing your risk exposure simultaneously.” - Andreas Welch, author of AI Leadership Handbook

Instead of assuming that “more AI equals better results,” responsible companies take a more disciplined, intentional approach. They treat AI add-ons like any other high-risk system deployment — and that means insisting on transparency, demanding thorough testing, and aligning every new AI-enabled feature with a real business need.

Here’s how they do it:

They demand a clear business case and identify KPIs.

Before enabling any AI feature, they ask: What real problem does this solve? What measurable benefits are we expecting? If the answers aren’t clear, the feature stays off until they are.They verify the model behavior and training data sources.

Smart companies dig deeper into how the AI system generates its outputs. They want to know: What data was the model trained on? What bias protections are in place? Can the model explain its reasoning in a way a human can verify?They insist on thorough testing — not just vendor demos.

Enterprises committed to responsible AI don’t rely on shiny demos to assess new features. They conduct their own independent testing, stress-testing the system under real-world conditions, edge cases, and adversarial prompts to expose weaknesses before customers (or employees) find them.They consider bringing in external auditors or testing firms.

When the stakes are high, smart companies partner with third-party evaluators who specialize in AI systems. An independent review provides objectivity and may catch risks internal teams overlook.They establish monitoring and escalation procedures.

Responsible organizations never just “turn it on and hope for the best.” They set up continuous monitoring to detect unusual AI behavior early, and create clear escalation paths that allow human intervention when needed.They scrutinize vendor contracts for AI-specific liabilities.

They carefully review licensing agreements and service terms, paying close attention to AI-specific clauses. Who is liable for errors? What protections are in place? Are there carve-outs that shift the burden of AI failures back onto the customer?

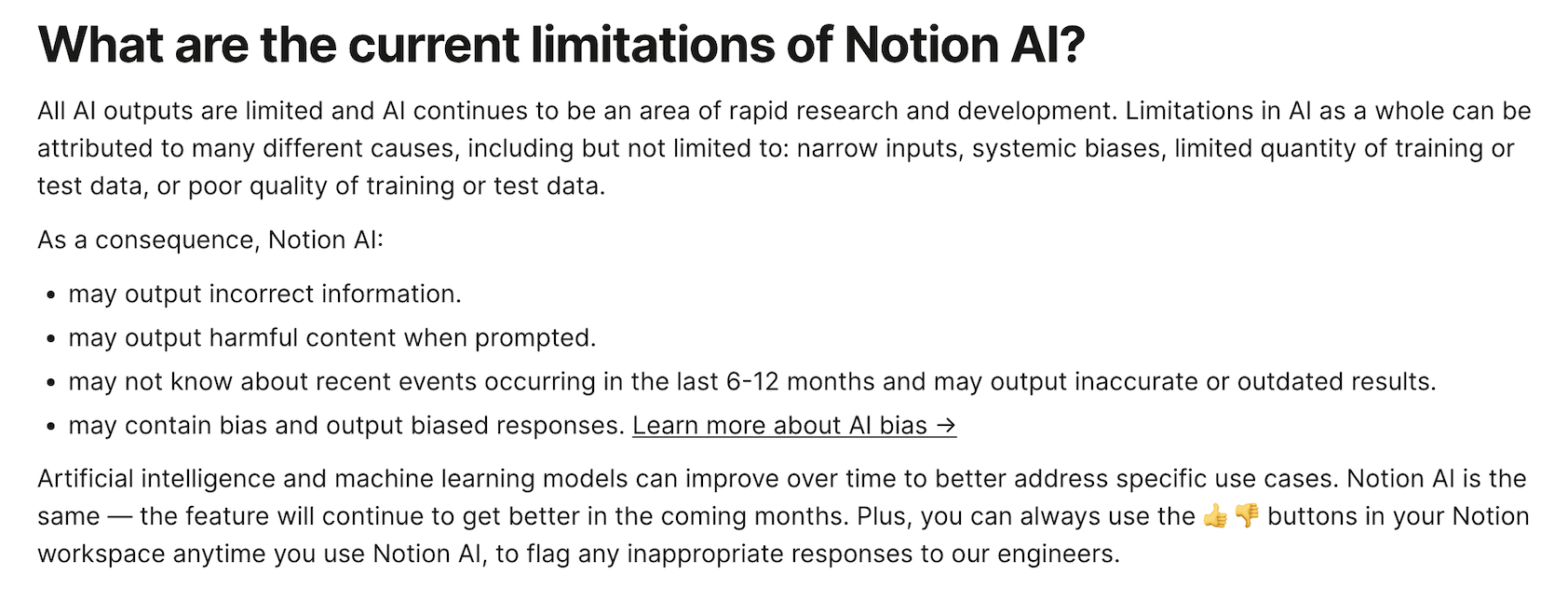

💡Here’s an AI-specific disclaimer from a productivity application I use on a daily basis: Notion. It highlights the limitations of their AI add-on along with its supplementary terms.

In short, they treat AI adoption not as an automatic upgrade, but as a strategic decision that requires the same rigor as adopting any critical system that can affect customers, data, compliance, or brand reputation.

The Stakes Are Higher Than You Think

Here’s the uncomfortable truth: Turning on an untested AI feature can be a career-ending decision — or even a business-ending one.

In today’s environment, customers, regulators, and partners have little patience for organizations that deploy risky AI systems and hide behind vendor promises. If something goes wrong, your AI chatbot gives dangerous advice, your AI-generated insights cause financial harm, or your AI hallucination leads to a major legal dispute, the fallout won’t land on the vendor alone.

The blame will and should land on the leaders who approved the decision without proper governance, oversight, or testing.

What You Must Do Before Enabling AI Features

Before you enable any AI-enabled functionality in an off-the-shelf system, take a disciplined, strategic approach. Here’s a practical checklist that organizations should follow:

[ ] Define a Clear Business Case

[ ] Understand the AI’s Behavior and Training Data.

[ ] Conduct Rigorous Testing.

[ ] Set Up Monitoring and Human Escalation Paths.

[ ] Review and Negotiate Vendor Agreements.

[ ] Implement an AI Governance Framework.

[ ] Document Everything.

Conclusion

In today’s AI-fueled world, leadership isn’t about how fast you can adopt the newest technology. It’s about how thoughtfully, responsibly, and strategically you do it.

Blindly enabling AI features in commercial software products may feel like innovation but in reality, it’s a dangerous gamble. Without careful evaluation, your organization could be exposing itself to operational failures, compliance violations, financial loss, and permanent reputational damage.

Remember:

Turning on an AI feature is not a harmless experiment.

You are responsible for the outcomes, even if a vendor supplies the tool.

The smartest move you can make isn’t flipping the switch just because you can. It’s making sure that when you do, you’ve done the work to ensure it’s safe, responsible, and aligned with your business goals.

In the end, real innovation isn’t about being first. It’s about being right.

And when it comes to AI, getting it right the first time will make all the difference.

References:

Welch, A. (2024). AI Leadership Handbook: A Practical Guide To Turning Technology Hype Into Business Outcomes. Andreas Welch.

Go Cloud Careers. Generative AI Architect Development Program. Retrieved from https://gocloudcareers.com/generative-ai-architect-development-program/